It’s 1961 and George Devol has just received his patent for “Programmed Article Transfer”. It reads as follows: “The present invention relates to the automatic operation of machinery, particularly the handling apparatus, and to automatic control apparatus suited for such machinery.” The Unimate 1900 series, the world’s first industrial robot, was actually born at a cocktail party in 1956, however. George Devol had just unveiled his latest invention, the Programmed Article Transfer device, and it was at this party that he met Joseph Engelberger, who would become known as the Father of Robotics. “Sounds like a robot to me,” Engelberger exclaimed. In 1957 Engelberger, who at the time was director of Consolidated Controls Corporation (a Condec subsidiary), convinced the CEO of Condec to develop Devol’s device. Two years later the first prototype, the Unimate #001, was built. Soon after, the first industrial robot was installed at the General Motors plant in New Jersey. The Unimate 1900 series went on to automate many of the assembly lines that existed at the time, with Japan being one of the first adopters of industrial robotics technology.

Today, with more than 373,000 industrial robots and a global market that is expected to grow up to $210 billion by 2025, it’s safe to say robotics will play an integral role in the next industrial revolution. We use robots more than ever, and in many different industries and businesses. From medicine to marketing and warehousing and logistics, robots are quickly becoming part of daily business operations. In this blog we take a look at developments in the field of robotics in a few of these industries.

Medical Robots

The global market value for medical robots is expected to reach $12.7 billion by 2025, up from $5.9 billion in 2020. The key drivers of growth in this market are robot-assisted rehabilitation, robot-assisted surgery and the increasing adoption of robots across the healthcare industry. In this blog we take a look at robot-assisted surgery, where a major player is Intuitive Surgical, the company behind the da Vinci surgical system. Maybe you remember the “did you know they did surgery on a grape” meme that circulated on Twitter in 2018. If you don’t: in 2018 a video from 2014 was shared widely on many platforms. The video showed surgeons peeling the skin off a grape and then stitching it back together, and the instrument used was the da Vinci surgical system. The impressive demonstration showcased the robot’s ability to perform minimally invasive surgery with great accuracy and dexterity.

The da Vinci surgical system has been around for more than 18 years, and in that time Intuitive Surgical has set the standard for robot-assisted surgery and continues to do so. While the da Vinci surgical system has a limited scope of use at the moment, this is likely to change in the coming years as more patients opt for minimally invasive surgery. The shift towards robot-assisted surgery saw a sharper uptick in 2020 with the COVID-19 pandemic. As hospitals struggled to deal with rising numbers of critical cases, many hospital staff faced a shortage of personal protective equipment, and many hospitals and clinics cancelled elective surgeries to prevent at-risk patients from catching the virus. Robot-assisted surgery is a possible workaround that helps ensure safety measures are upheld. As robots find their place in the surgical theatre, they are also being used more frequently in orthopaedics, prosthetics, psychotherapy and more. Robotics in the healthcare industry continues to grow, and in the space of a few decades it will transform the industry, from patient experience to surgery.

Collaborative Robots

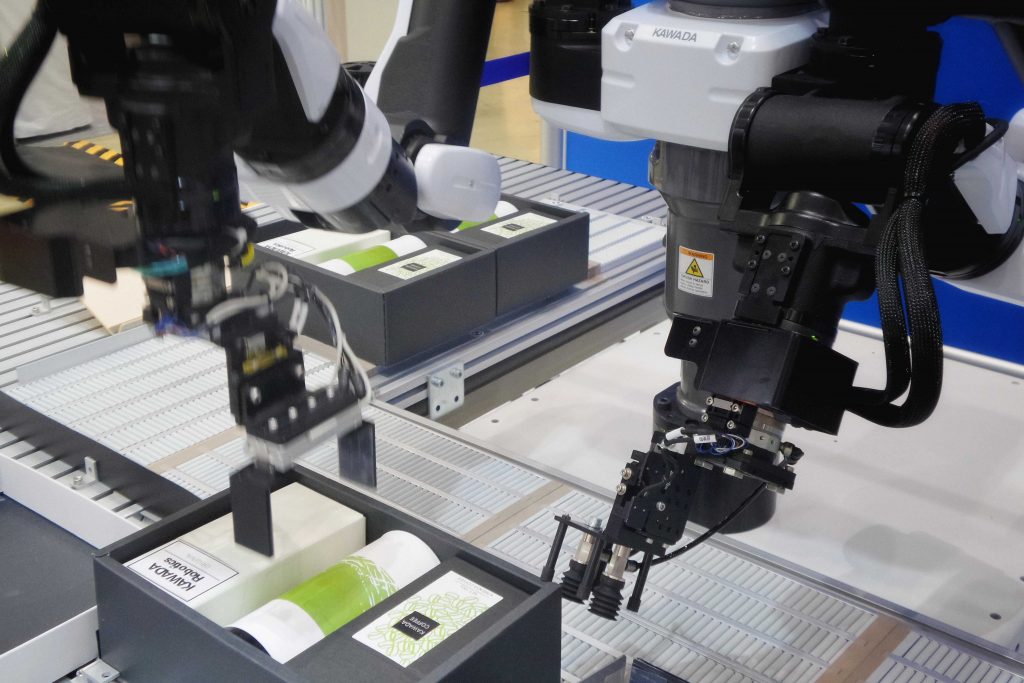

Collaborative robots, or cobots, are the next evolution in industrial robotics. First invented in 1996 by J. Edward Colgate and Michael Peshkin, the cobot has since developed into an integral part of Industry 4.0. These robots are designed for human-robot interaction within a shared space. How do they differ from the traditional industrial robot you’d find on any assembly line? While industrial robots are programmed to accomplish certain tasks, cobots are designed to interact and collaborate with humans and to enhance human ability. Industrial robots, on the other hand, are not designed to interact with humans and operate in ‘cages’ because they can be a safety hazard. They are capable of moving tons of material, which makes them dangerous to humans trying to navigate the assembly line floor, so they are often programmed to stop when a human enters their vicinity. This can lead to performance and safety issues. Collaborative robots, by contrast, can monitor their environment and continue to work without a decline in performance or safety. Cobots are more important for businesses now than ever as we continue to innovate into the future. As technologies such as the Internet of Things, 5G and Big Data are developed, cobots find their place in industry in great synergy with these technologies.

The global market for cobots was valued at $649 million in 2018 and is expected to grow at a rate of 44.5% from 2020 to 2025. The collaborative robot market is still new: the first company to offer collaborative robots was Cobotics, founded by J. Edward Colgate and Michael Peshkin in 1997. The company offered cobots for the automobile assembly line, including models that used ‘Hand-assisted Control’. Today Universal Robots has established itself as a market leader in the field, releasing cobots with applications in education, entertainment, manufacturing and research.

Automated Warehouses

In the last 20 years, warehousing and logistics have expanded rapidly. As industries innovate and adapt, so have warehouses, and with the rise of online retail and e-commerce we’ve seen warehousing and logistics become increasingly automated. Technologies such as the Internet of Things, Artificial Intelligence and sensors continue to be developed at a fast rate, and this has supported the growth of the automated warehousing market. In 2015 this market was valued at $15 billion; by 2026 it’s expected to be worth $30 billion. A major reason why more and more businesses are turning to automated solutions lies in the benefits automation offers. Integrating AI into their warehousing lets businesses benefit from increased productivity and better accuracy. Better sensor technology working in tandem with AI can improve working conditions in a warehouse. Integrating these technologies into warehouse management software can lead to better oversight and tracking, with the software ideally working as the hub of warehouse operations.
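To put the market figures above in perspective, the short sketch below works out the annual growth rate implied by going from $15 billion in 2015 to $30 billion in 2026. It assumes steady year-on-year compounding, which the forecast itself does not state, and the function name `implied_cagr` is made up for illustration.

```python
def implied_cagr(start_value, end_value, years):
    """Return the compound annual growth rate that takes
    start_value to end_value over the given number of years.

    Assumes growth compounds once per year at a constant rate.
    """
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text: $15 billion (2015) to $30 billion (2026).
warehouse_cagr = implied_cagr(15e9, 30e9, 2026 - 2015)
print(f"Implied annual growth: {warehouse_cagr:.1%}")
```

Under that assumption, a doubling over eleven years works out to roughly 6.5% growth per year.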

What kind of robots would you find in an automated warehouse? Warehousing robots usually fall into three categories: Automated Guided Vehicles (AGVs), Autonomous Mobile Robots (AMRs) and aerial drones.

Automated guided vehicles act as carts that carry inventory to different parts of the warehouse. They are usually guided by magnetic strips or move along tracks laid out in the warehouse. 2XL, a logistics company based in Belgium, has automated its warehouses with AGVs, which replaced workers who previously spent most of their time walking from one part of the warehouse to another. Since the AGVs took over, the company has observed increased warehouse efficiency at lower cost, because the AGVs can work through the night and on weekends for the same price as operating during the day.

Autonomous Mobile Robots have the same function as AGVs, but they do not need magnetic strips or a track to navigate the warehouse. Instead, they have the hardware and software to map their environment, as well as sensors to navigate around obstacles. These robots are smaller, so they do not carry heavy payloads and are instead an asset in sorting inventory. Thanks to their smaller size, they are able to move between packages and identify the information on them.
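As a rough illustration of what mapping the environment and navigating around obstacles can mean in software, here is a minimal sketch of route finding on a grid map using breadth-first search. It is a toy example only: the grid, the `shortest_path` function and the cell coordinates are all made up for illustration, and real robots use far richer maps and planners.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a grid map.

    grid: list of rows, where 0 is free floor and 1 is an obstacle.
    start, goal: (row, col) tuples.
    Returns the list of cells from start to goal, or None if blocked.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through came_from to rebuild the route.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


# Hypothetical warehouse floor: 1s are shelving the robot must drive around.
warehouse = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
route = shortest_path(warehouse, (0, 0), (3, 0))
print(route)
```

A track-guided AGV has no need for this kind of search, since its route is fixed by the strips on the floor. An AMR recomputes its route whenever the map changes.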

In warehousing and logistics, aerial drones are generally thought of as aerial delivery devices. While drone delivery may still seem like wishful thinking, aerial drones can already be used to optimize warehouse processes. Since drones can fly, they can navigate the warehouse more quickly and easily. They can scan for inventory and interact with the warehouse management system. While today they require a human operator, the vision is for more automated drone integration.

Telepresence Robots

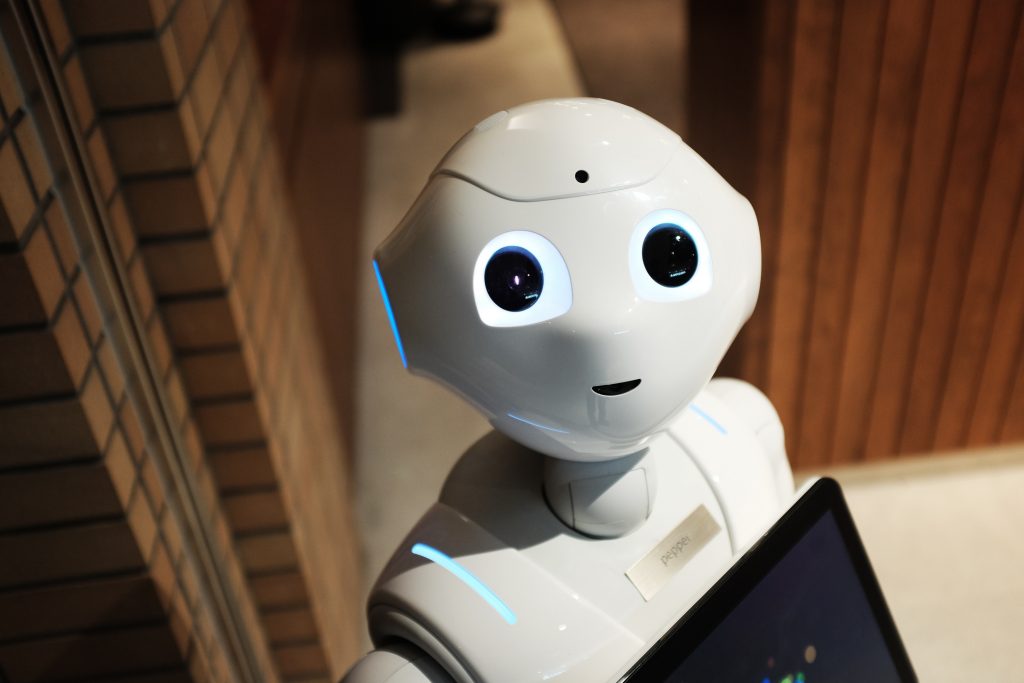

If any of our readers have binged Modern Family, they probably remember the episode where Phil Dunphy can’t make it to a family gathering and instead chooses to insert himself into the events via an iPad on wheels. Telepresence robots can be summarized as iPads on wheels: an operator-controlled robot with a video camera, a mic, a screen and speakers, so that an individual can be ‘present’ in an environment. Telepresence robots have found their way into almost every working environment, including schools, clinics, warehouses and corporate offices.

With the COVID-19 pandemic, countries went into lockdown, which led many businesses to adopt work-from-home measures. Some sectors suffered more than others from the resulting lack of human contact, especially the social and healthcare sectors. Many businesses, clinics and offices began to look for ways to bridge this gap by adopting a more immersive telepresence experience. While most of these robots are literally just tablets on wheels running Zoom or Portal, their contribution is not to be taken lightly. These robots were able to have a positive effect on patient morale, especially among elderly patients. There are also more sophisticated, more humanoid telepresence robots that are often used in care homes, such as the Pepper robot from SoftBank. As businesses look towards a future with more distanced meetings and conferences, it makes sense to invest in telepresence robotics to give participants a more physical presence in a meeting and to establish more concrete relationships than a screen alone allows.